Stanford CS236: Deep Generative Models I 2023 I Lecture 16 - Score Based Diffusion Models


Score-based models

- Score-based models use a neural network to estimate the score of a data distribution, i.e., the gradient of the log-density with respect to the data point, ∇ₓ log p(x).

- Denoising score matching is an efficient way to estimate the score of a noise-perturbed data distribution by training a model to denoise the data.

- Taking the limit of infinitely many noise levels turns a score-based model into a continuous-time diffusion process described by a stochastic differential equation.
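A minimal sketch of denoising score matching, assuming a toy one-dimensional dataset drawn from N(0, 1) and a linear score model s(x̃) = a·x̃ in place of a neural network (both are illustrative assumptions, not the lecture's setup). The regression target is the score of the Gaussian perturbation kernel, -(x̃ - x)/σ², and the fitted slope should approach the slope -1/(1 + σ²) of the true score of the perturbed distribution N(0, 1 + σ²):

```python
import numpy as np

def dsm_targets(x, sigma, rng):
    """Perturb clean samples and return (noisy samples, DSM regression targets)."""
    z = rng.standard_normal(x.shape)
    x_noisy = x + sigma * z
    # Score of the perturbation kernel q(x_noisy | x) = N(x, sigma^2), evaluated at x_noisy
    target = -(x_noisy - x) / sigma**2
    return x_noisy, target

def fit_linear_score(x, sigma, seed=0):
    """Fit s(x_noisy) = a * x_noisy by minimizing the DSM loss (closed-form least squares)."""
    rng = np.random.default_rng(seed)
    x_noisy, target = dsm_targets(x, sigma, rng)
    # argmin_a mean((a * x_noisy - target)^2)
    return np.sum(x_noisy * target) / np.sum(x_noisy**2)

rng = np.random.default_rng(42)
data = rng.standard_normal(200_000)  # toy data distribution: N(0, 1)
sigma = 0.5
a = fit_linear_score(data, sigma)
# The perturbed distribution is N(0, 1 + sigma^2), whose true score is -x / (1 + sigma^2)
print(a, -1 / (1 + sigma**2))
```

The model never sees the true score; it only regresses onto the easy-to-compute kernel score, yet the minimizer matches the score of the noise-perturbed distribution.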

Diffusion models

- Diffusion models can be interpreted as a type of hierarchical variational autoencoder, where the fixed noise-adding process acts as the encoder and the learned denoising process acts as the decoder.

- The stochastic process behind a diffusion model can be converted into an ordinary differential equation (ODE) with the same marginals, allowing exact likelihood computation and more efficient sampling methods.

- Controllable generation in diffusion models can be achieved by conditioning the model on side information, such as a class label or a text caption.

- Diffusion models are a type of generative model that can generate realistic-looking images.
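A minimal sketch of the ODE view, assuming a toy setting where the score is known in closed form: data from N(0, 1) perturbed with a variance-exploding schedule σ(t) = σ_max·t (σ_max = 10 is an arbitrary choice). The probability flow ODE dx/dt = -½ (dσ²/dt) ∇ₓ log p_t(x) is integrated with plain Euler steps. Running it from t = 1 to t = 0 maps noise to data deterministically, and running it back approximately recovers the starting noise; this kind of invertible map is what makes exact likelihood computation possible:

```python
import numpy as np

SIGMA_MAX = 10.0  # assumed VE schedule: sigma(t) = SIGMA_MAX * t

def score(x, t):
    # Exact score of p_t = N(0, 1 + sigma(t)^2) when the data distribution is N(0, 1);
    # a trained score network would replace this in practice
    return -x / (1.0 + (SIGMA_MAX * t) ** 2)

def pf_ode_drift(x, t):
    # Probability flow ODE for the VE SDE: dx/dt = -0.5 * d[sigma^2]/dt * score(x, t)
    dsigma2_dt = 2.0 * SIGMA_MAX**2 * t
    return -0.5 * dsigma2_dt * score(x, t)

def integrate(x, t_start, t_end, n_steps=1000):
    # Plain Euler steps; works in either time direction
    ts = np.linspace(t_start, t_end, n_steps + 1)
    for t0, t1 in zip(ts[:-1], ts[1:]):
        x = x + (t1 - t0) * pf_ode_drift(x, t0)
    return x

rng = np.random.default_rng(0)
noise = rng.standard_normal(100_000) * np.sqrt(1.0 + SIGMA_MAX**2)  # samples from p_1
samples = integrate(noise, 1.0, 0.0)  # deterministic map: noise -> data
print(samples.std())                  # close to 1, the std of the toy data
```

Because no randomness is injected after the initial draw, each noise sample determines exactly one output, unlike the stochastic samplers discussed below.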

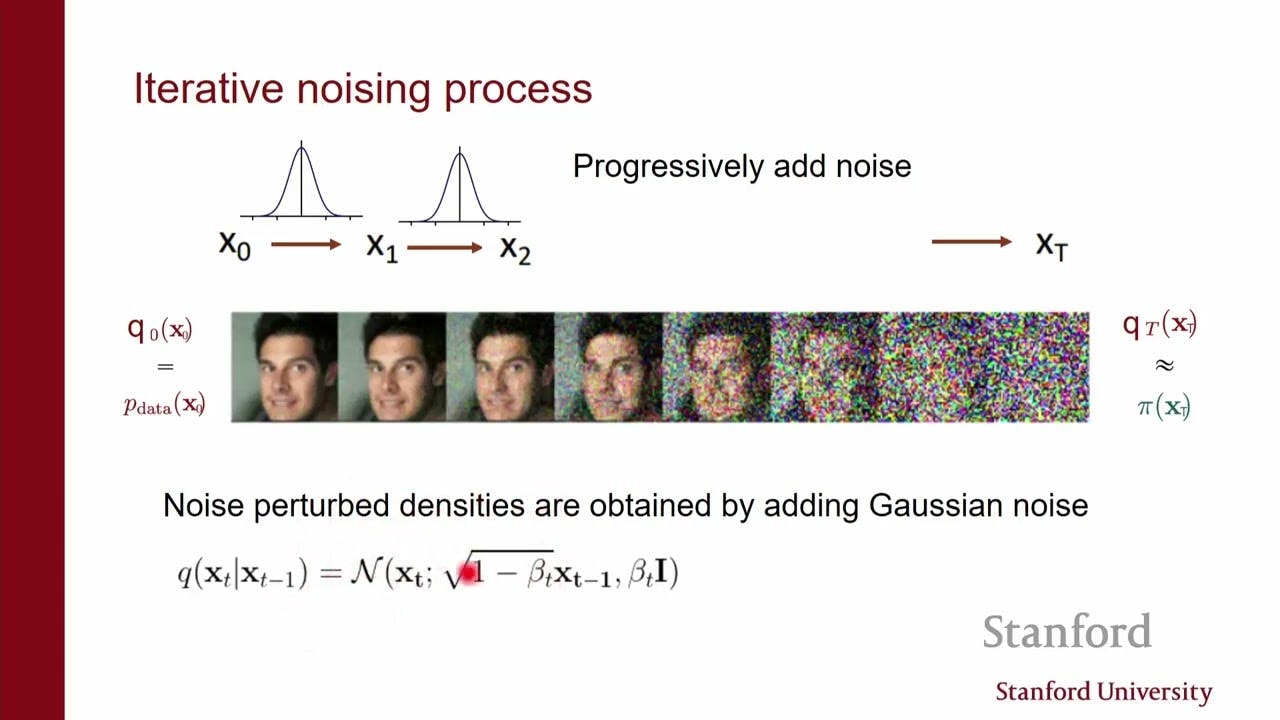

- They work by gradually adding noise to an image until it becomes completely random, and then gradually removing the noise to generate a new image.

- The training objective of a diffusion model is to maximize the evidence lower bound (ELBO), a lower bound on the log-likelihood of the training data.

- The encoder in a diffusion model is fixed and simply adds noise to the image, while the decoder is a neural network that learns to remove the noise.

- Up to a weighting of the noise levels, the loss function for a diffusion model is the same as the denoising score matching loss, which means that the model is learning to estimate the scores of the noise-perturbed data distributions.

- The sampling procedure for a diffusion model is similar to the Langevin dynamics used in score-based models, but with different scalings of the noise.

- Traditional diffusion models use a discrete number of steps to add noise, but a continuous-time diffusion process can be described using a stochastic differential equation.

- The reverse process of going from noise to data can also be described using a stochastic differential equation, and the solution to this equation can be used to generate data.

- The score function is a key component of the stochastic differential equation, and it can be estimated using score matching.

- In practice, continuous-time diffusion models can be implemented by discretizing the stochastic differential equation and using numerical solvers to solve it.

- Predictor-corrector samplers reduce the numerical errors of this discretization by running a few steps of Langevin dynamics (the sampler used by score-based models) after each time step of the numerical solver.

- DDPM sampling corresponds to a predictor-only discretization of the underlying stochastic differential equation, while annealed Langevin sampling in score-based models corresponds to a corrector-only scheme.

- Denoising diffusion implicit models (DDIM) convert the stochastic differential equation into an ordinary differential equation whose marginals match those of the SDE at every time step.

- DDIM has two advantages: sampling can be more efficient (it needs fewer steps), and the resulting deterministic map can be treated as a flow model with exact likelihood evaluation.
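A minimal predictor-corrector sampler, assuming the same kind of toy setting as an illustration: data from N(0, 1), a variance-exploding schedule σ(t) = σ_max·t, and the exact score substituted for a trained network. The predictor is one Euler-Maruyama step of the reverse-time SDE dx = -g(t)² ∇ₓ log p_t(x) dt + g(t) dw̄ with g(t)² = dσ²/dt; the corrector is a few Langevin steps at the new noise level, with a step size scaled to the current variance (an assumption of this sketch). Setting `n_corrector=0` gives a predictor-only sampler, which mirrors the predictor-only scheme attributed to DDPM above, although this sketch uses a VE SDE rather than DDPM's own update:

```python
import numpy as np

SIGMA_MAX = 10.0  # assumed VE schedule sigma(t) = SIGMA_MAX * t; toy data N(0, 1)

def sigma(t):
    return SIGMA_MAX * t

def score(x, t):
    # Exact score of the perturbed marginal p_t = N(0, 1 + sigma(t)^2); stands in for a score network
    return -x / (1.0 + sigma(t) ** 2)

def pc_sampler(n, n_steps=1000, n_corrector=1, eps_scale=0.01, seed=0):
    rng = np.random.default_rng(seed)
    ts = np.linspace(1.0, 0.0, n_steps + 1)
    x = rng.standard_normal(n) * np.sqrt(1.0 + sigma(1.0) ** 2)  # start from p_1
    for t, t_next in zip(ts[:-1], ts[1:]):
        dt = t - t_next
        g2 = 2.0 * SIGMA_MAX**2 * t  # g(t)^2 = d[sigma^2]/dt
        # Predictor: one Euler-Maruyama step of the reverse-time SDE, from t down to t_next
        x = x + g2 * score(x, t) * dt + np.sqrt(g2 * dt) * rng.standard_normal(n)
        # Corrector: Langevin steps targeting p_{t_next}
        eps = eps_scale * (1.0 + sigma(t_next) ** 2)
        for _ in range(n_corrector):
            x = x + eps * score(x, t_next) + np.sqrt(2.0 * eps) * rng.standard_normal(n)
    return x

samples = pc_sampler(50_000)
print(samples.mean(), samples.std())  # close to 0 and 1, the toy data statistics
```

The corrector costs extra score evaluations per step; in exchange it pulls the samples back toward the intended marginal whenever the predictor's discretization drifts away from it.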

Noise Conditional Score Network (NCSN)

- The Noise Conditional Score Network (NCSN) estimates the scores of the data distribution perturbed at several noise levels; samples are then generated by iteratively reducing the amount of noise.

- The reverse of that sampling process, which produces the noisy samples used in the denoising score matching loss, adds noise to the data at every step until pure noise is reached.

- The process of going from data to noise can be seen as a Markov process where noise is added incrementally, and the joint distribution over the random variables is defined as the product of conditional densities.

- The encoder in NCSN is a simple procedure that maps the original data point to a vector of latent variables by adding noise to it.

- The marginals of the distribution are also Gaussian, and the probability of transitioning from one noise level to another can be computed in closed form.

- Because these marginals are available in closed form, a noisy sample at any specific time step can be drawn directly from the data point without simulating the whole chain, which makes training computationally efficient.

- The diffusion process in NCSN is analogous to heat diffusion, where probability mass is spread out over the entire space.

- Inverting the process at inference time relies on a few properties of the noising process; in particular, it should gradually smooth out the structure of the data distribution, which makes sampling easier.

- The goal is to learn a probabilistic model that can generate data by inverting a process that destroys structure and adds noise to the data.

- The process of adding noise is defined by a transition kernel that spreads out the probability mass in a controllable way, such as Gaussian noise.

- The key idea is to learn an approximation of the reverse kernel that removes noise from a sample, which can be done variationally through a neural network.

- The generative distribution is defined by sampling from a simple prior and then sampling from the conditional distributions of the remaining variables one at a time, going from right to left (from the noisiest variable back to the data).

- The noise schedule is chosen so that the last variable in the chain has a very low signal-to-noise ratio, essentially reaching a steady state of pure noise; this is why generation can start from a simple prior.

- Alternatively, Langevin dynamics steps can be added to correct the mistakes made by this vanilla sampling procedure, at the cost of more computation.
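A minimal sketch of why the closed-form marginals matter, assuming the variance-preserving Gaussian kernel used by DDPM, q(x_s | x_{s-1}) = N(√(1 - β_s) x_{s-1}, β_s I), and a linear β schedule from 1e-4 to 0.02 over 1000 steps (an assumed schedule for illustration). Simulating the chain step by step and jumping straight to step t with q(x_t | x_0) = N(√ᾱ_t x_0, (1 - ᾱ_t) I), where ᾱ_t = ∏_{s≤t} (1 - β_s), should give matching statistics:

```python
import numpy as np

def linear_betas(T=1000, beta_1=1e-4, beta_T=0.02):
    # Linear noise schedule (an assumption for this sketch)
    return np.linspace(beta_1, beta_T, T)

def simulate_chain(x0, betas, t, rng):
    # Run q(x_s | x_{s-1}) = N(sqrt(1 - beta_s) x_{s-1}, beta_s) for s = 1..t
    x = x0.copy()
    for beta in betas[:t]:
        x = np.sqrt(1.0 - beta) * x + np.sqrt(beta) * rng.standard_normal(x.shape)
    return x

def sample_marginal(x0, betas, t, rng):
    # Jump straight to step t using the closed-form marginal q(x_t | x_0)
    alpha_bar = np.prod(1.0 - betas[:t])
    return np.sqrt(alpha_bar) * x0 + np.sqrt(1.0 - alpha_bar) * rng.standard_normal(x0.shape)

rng = np.random.default_rng(0)
betas = linear_betas()
x0 = np.full(20_000, 2.0)  # every toy "data point" is the scalar 2.0
t = 300
chain = simulate_chain(x0, betas, t, rng)    # t sequential noising steps
direct = sample_marginal(x0, betas, t, rng)  # a single step
print(chain.mean(), direct.mean())
print(chain.std(), direct.std())
print(np.prod(1.0 - betas))  # signal fraction left at the final step: tiny, i.e. nearly pure noise
```

The direct sample costs one draw regardless of t, which is what lets training pick an arbitrary noise level without simulating the chain; the last print illustrates the low signal-to-noise ratio reached at the end of the schedule.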

Training diffusion models


- Fixing the encoder to be a simple noise-adding function simplifies the training process.

- The λ (lambda) weights control the relative importance of the different noise levels in the loss.

- The β (beta) parameters form the noise schedule: they control how quickly noise is added.

- The architecture is similar to a noise-conditional score model, with a single decoder amortized across different noise levels.

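- Training is efficient because the objective decomposes into a sum of per-noise-level terms, and each term can be estimated from a single randomly chosen noise level and a closed-form noisy sample, without simulating the whole chain.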
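A minimal sketch of this training loop, assuming the DDPM-style noise-prediction form of the loss, λ_t ‖ε - ε_θ(x_t, t)‖², a toy N(0, 1) dataset, a hypothetical short schedule (50 steps, β from 1e-3 to 0.2), and a toy "denoiser" with one scalar weight per noise level instead of a neural network. Each example gets a random noise level and a closed-form noisy sample, so no chain is simulated. For this data the learned weights should approach the optimal predictor E[ε | x_t] = √(1 - ᾱ_t) x_t:

```python
import numpy as np

T = 50
betas = np.linspace(1e-3, 0.2, T)    # hypothetical short noise schedule for the toy
alpha_bar = np.cumprod(1.0 - betas)  # alpha_bar[t] = prod_{s<=t} (1 - beta_s)
lam = np.ones(T)                     # per-level weights lambda_t (uniform here)

def train(steps=2000, batch=4096, lr=0.25, seed=0):
    rng = np.random.default_rng(seed)
    w = np.zeros(T)  # toy denoiser: eps_hat(x_t, t) = w[t] * x_t, one weight per noise level
    for _ in range(steps):
        x0 = rng.standard_normal(batch)     # toy data distribution N(0, 1)
        t = rng.integers(0, T, size=batch)  # one random noise level per example
        eps = rng.standard_normal(batch)
        # Closed-form sample from q(x_t | x_0)
        xt = np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * eps
        residual = w[t] * xt - eps
        # Gradient of mean_i lam[t_i] * residual_i^2 with respect to each w[k]
        grad = np.bincount(t, weights=2.0 * lam[t] * residual * xt / batch, minlength=T)
        w -= lr * grad
    return w

w = train()
# For N(0, 1) data the optimal noise predictor is E[eps | x_t] = sqrt(1 - alpha_bar_t) * x_t
print(np.abs(w - np.sqrt(1.0 - alpha_bar)).max())
```

Because each noise level has its own weight in this toy, λ_t only rescales that level's gradient and does not move its optimum; with a single decoder amortized across noise levels, as in the notes, λ_t instead trades off accuracy between levels.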