Stanford EE274: Data Compression I 2023 I Lecture 9 - Context-based AC & LLM Compression

()

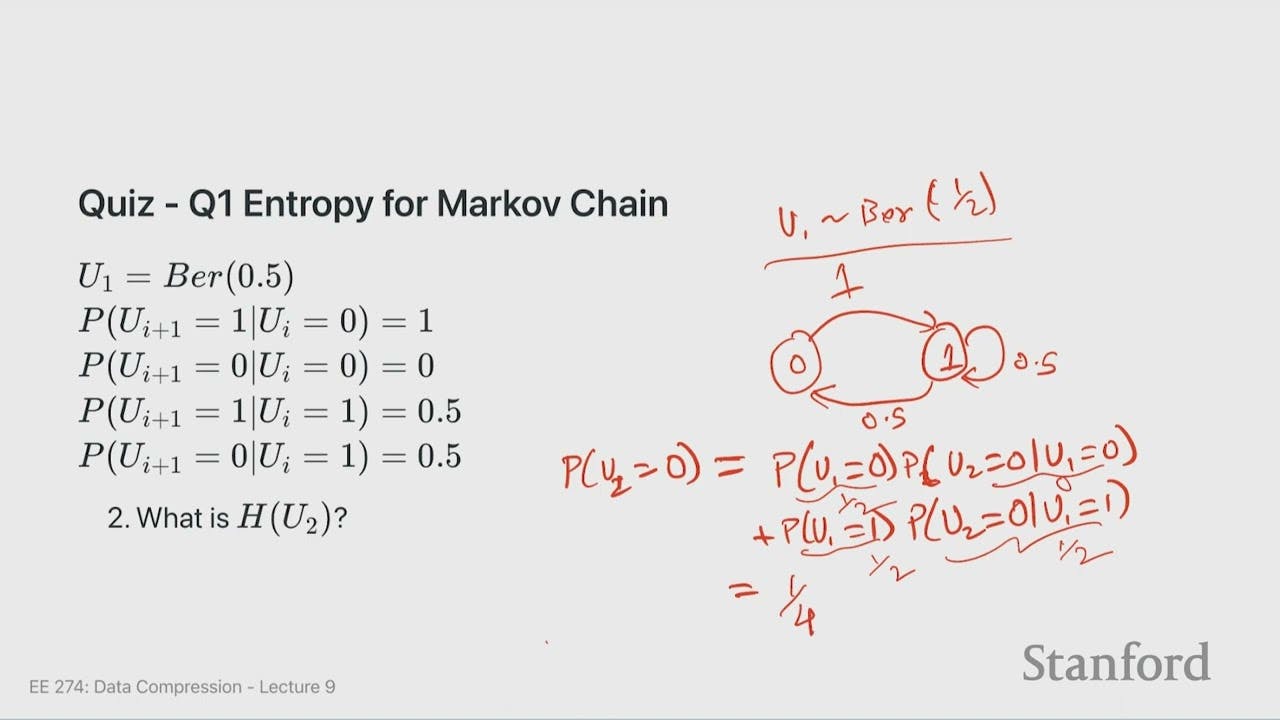

Markov Chains and Entropy Rate

- A recap of key concepts is provided, including Markov chains, stationary processes, conditional entropy, and the entropy rate.

- A given Markov chain is analyzed, and its properties, such as entropy of U1, entropy of U2, stationarity, and entropy rate, are calculated.

- The concept of achieving the entropy rate is introduced, and it is explained that for a first-order Markov source, the entropy rate is equal to the entropy of U2 given U1.

Text Data Compression

- The lecture concludes with a brief introduction to text data compression and an overview of the Lempel-Ziv-Welch (LZW) compression technique, which will be discussed in detail in the next lecture.

Arithmetic Coding

- Arithmetic coding works by dividing intervals into symbols and splitting the sub-interval corresponding to each symbol.

- The length of the interval at the end of the encoding is the product of the probabilities of each symbol.

- The code length for each symbol is roughly the logarithm of the inverse of the interval length.

- Context-based arithmetic coding is a modified version of arithmetic coding that uses the knowledge of the past symbols to split the intervals.

- The expected bits per symbol for context-based arithmetic coding is equal to the conditional entropy, which is the entropy rate in the case of a first-order Markov source.

- The code length for context-based arithmetic coding is the logarithm of the product of the conditional probabilities.

- A better predictor gives higher probabilities, resulting in shorter code lengths.

- Better prediction leads to smaller code length and better compression in arithmetic coding.

- The number of bits used to encode a symbol is approximately the negative logarithm of its probability.

- A good model can predict the next symbol with high probability, resulting in fewer bits required for encoding.

- Providing more context to a good model improves its prediction accuracy and reduces the number of bits needed for encoding.

- Arithmetic decoding is symmetric to arithmetic encoding and requires the same model to split intervals and decode symbols.

- If a model is not available, the empirical distribution of the input data can be used to build a model for compression.

Adaptive Arithmetic Coding

- There are two approaches to modeling: building a model from data before encoding (used in Homework 1) and building an adaptive model as data is processed.

- Adaptive arithmetic coding updates the model at every step based on the data seen so far.

- The decoder needs to update its model accordingly to stay in sync with the encoder.

- It's crucial for the encoder and decoder to share the same state at every step to avoid breakdowns.

- Don't provide zero probability to any symbol, as it can break the arithmetic coder.

- Two-pass encoding learns the model from the entire data and can be parallelized, but requires storing the model in the compressed file and may not be suitable for streaming data.

- Adaptive encoding doesn't need to store the model and is good for streaming, but the initial model is weak and compression may be slightly worse initially.

- Prediction and compression are essentially the same problem, with the cost function for arithmetic coding matching the prediction loss function.

- A good predictor can be used as a compressor, and vice versa, although this can be trickier.

Kth-Order Adaptive Arithmetic Coding

- Kth-order adaptive arithmetic coding aims to learn a k-order model for the data as it's processed.

- Adaptive arithmetic coding is a data compression technique that uses past symbols to predict future symbols.

- The order of the model refers to the number of past symbols used to make predictions.

- Higher-order models can make more accurate predictions but require more memory and can suffer from sparse counts, especially with limited data.

- The example of the Hound of Baskerville was used to compare zeroth, first, second, and third-order adaptive arithmetic coding, as well as gzip and bzip2 compression.

- Higher-order models did not perform as well as expected due to the limited size of the data, which resulted in sparse counts and poor predictions.

- Adaptive arithmetic coding has limitations, including slow performance, exponentially growing memory requirements, and sparse counts for large orders, which can lead to worse performance.

- A two-pass approach using conditional entropy showed promising results, particularly for higher-order models.

- Adaptive K-order modeling involves adjusting the order of the model based on the input data to improve compression efficiency.

- Increasing the order of the model can lead to sparse counts and make it harder to predict symbols accurately.

Advanced Prediction Models

- Advanced prediction models, such as neural network-based models and context mixing models, can achieve better compression but are computationally expensive.

- Recent progress in text compression has surpassed Shannon's estimate of the entropy rate of English using large language models (LLMs) as predictors.

- LLMs are trained as predictors and their loss function is the cross-entropy loss, making them effective compressors.

- Deploying large models as predictors can be practical in certain settings where everyone has access to the same model, such as compressing large amounts of similar data.

- Exploring the limits of compression and experimenting with different models is valuable for understanding text prediction and compression techniques.

- LLMs achieve remarkable compression results, surpassing bzip2 but not as impressive as gzip or arithmetic coding.

- Smaller LLMs perform better with longer contexts, approaching the optimal Shannon bound.

- LLMs struggle with data that differs from their training distribution, such as ancient languages like Pali.

- LLMs perform exceptionally well on data they were trained on, like Sherlock Holmes novels, due to memorization.

- Caution is needed when evaluating compression results to avoid using data from the training set.

- LLMs are currently slow and computationally intensive, but smaller models show promise for practical applications.

- Overfitting can be beneficial for compression, as long as the model is shared for specific datasets.