Stanford EE274: Data Compression I 2023 I Lecture 16 - Learnt Image Compression

()

Traditional Image Compression

- Traditional image compression methods, such as JPEG, use linear transforms (matrix multiplication) and rely on heuristics and hand-determined parameters.

- JPEG 2000, BPG, and other traditional image compressors use linear transforms (matrix multiplication).

Learned Image Compression

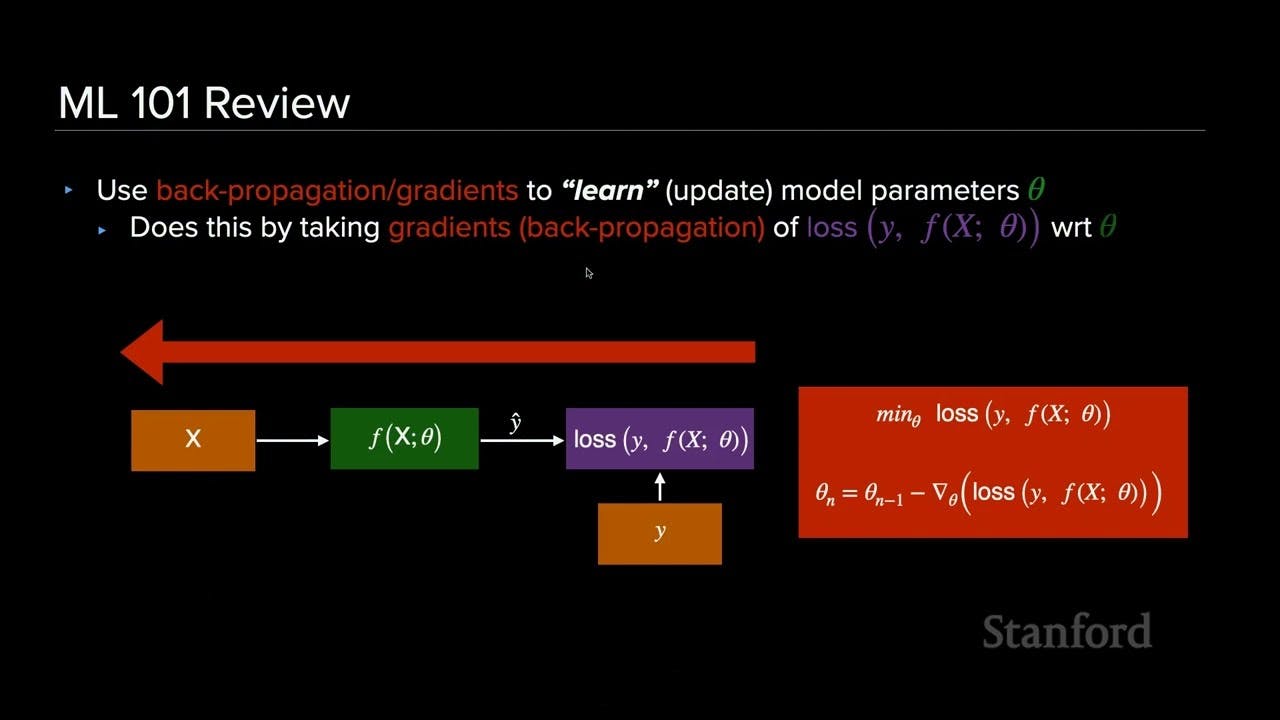

- Learned compression uses machine learning to improve upon traditional compression methods by employing nonlinear transforms and end-to-end training.

- Learned compression aims to optimize the entire compression pipeline, including the transform, quantization, and entropy coding, in an end-to-end manner.

- Learned image compressors can adaptively learn parameters for specific domains, tasks, or even content, providing flexibility and improved performance.

- Learned compressors offer better distortion model separation, allowing for optimization of any differentiable distortion metric, including those that better align with human perception.

- Learned image compressors have achieved state-of-the-art performance, surpassing traditional codecs in terms of quality at lower bit rates.

Advantages of Learned Compression over Traditional Compression

- ML-based codecs offer better fidelity to chosen probability models, resulting in improved rate distortion.

- ML-based codecs allow users to choose any differentiable distortion, making them highly adaptable and flexible.

Challenges of Learned Compression

- One of the main challenges with learned image compressors is their speed, as they require training with millions of parameters, which can be computationally expensive.

- The main challenge in deploying ML-based codecs is their computational complexity, which can be 20 times more than traditional codecs.

- Different hardware platforms may have different floating-point operation implementations, leading to potential failures in reproducibility across machines and platforms.

Autoencoders for Image Compression

- Autoencoders consist of an encoder and a decoder.

- The encoder analyzes the input image and reduces it to a lower-dimensional representation (latent space).

- The decoder synthesizes an image from the latent space.

- The goal of training an autoencoder is to minimize the reconstruction loss between the original image and the reconstructed image.

- To achieve rate-distortion optimization, a rate loss term (estimated using the probability distribution of the latent space) and a distortion loss term (L1 loss) are combined in the loss function.

- During training, noise is added to the latent space to approximate the quantization step.

- After training, an arithmetic encoder is used for lossless compression of the latent space.

- The resulting compressor achieves good reconstruction quality with low bit rates.

Future of Learned Image Compression

- ML-based codecs are becoming increasingly prevalent and are expected to be widely used in future compressors.