Stanford Seminar - Towards trusted human-centric robot autonomy

Safe Control

- One challenge in building trustworthy autonomous systems is safe control: deciding how close is too close when humans and robots interact.

- Different stakeholders make different assumptions about human behavior, leading to different definitions of safety.

- The speaker introduces the concept of a "safety concept" that provides a measure of safety and safe/unsafe controls based on the world state.

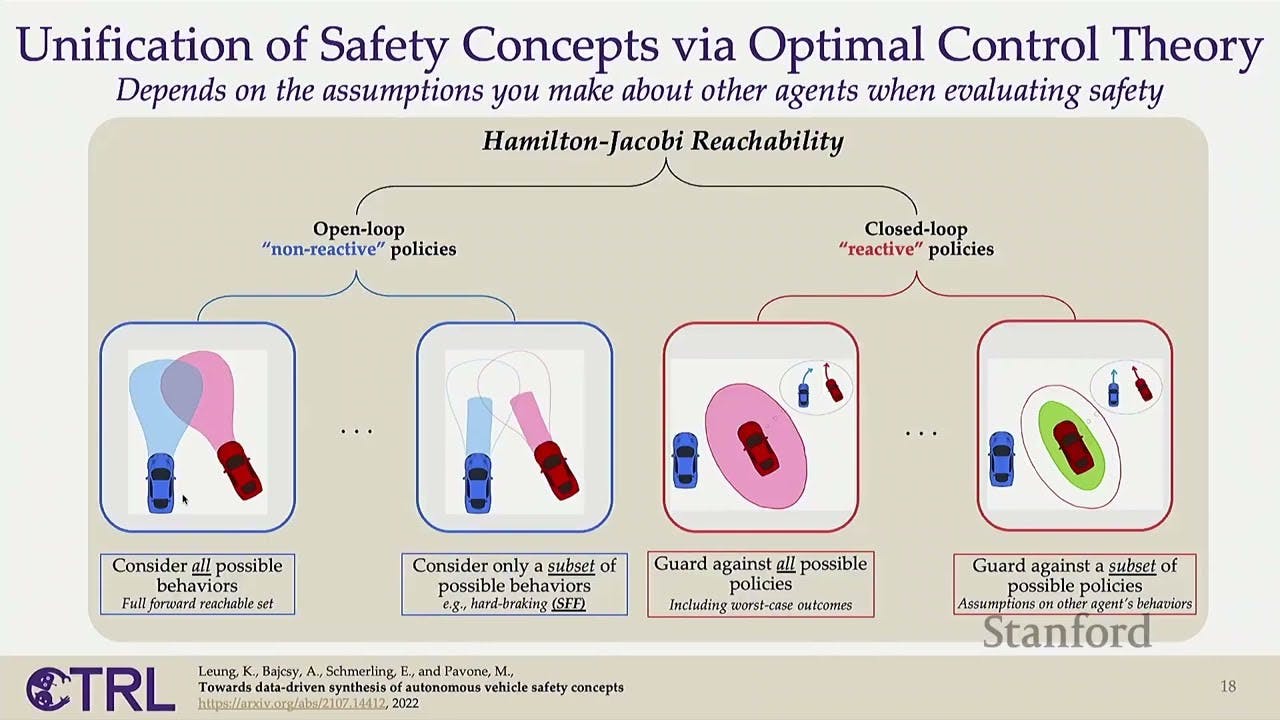

- Various safe control strategies are mentioned, including velocity obstacle, contingency planning, Hamilton-Jacobi reachability, safety force field, and responsibility-sensitive safety.

- The speaker introduces Hamilton-Jacobi reachability, a mathematical framework that captures closed-loop interactions between agents.

- The solution to the Hamilton-Jacobi-Isaacs equation, denoted as V, can be interpreted as a measure of safety, with the goal of maximizing safety and avoiding collisions.

- The challenge lies in making reasonable assumptions about how other agents behave, as overly conservative assumptions can lead to impractical safety sets.

- The speaker proposes learning a state-dependent control set from data, leveraging the assumption that humans tend to take safe controls.

- Control barrier functions are introduced as a way to represent control-invariant sets and to parameterize the state-dependent control set.

- The learning problem becomes a control barrier function learning problem, which can be solved efficiently using gradient descent.

- By incorporating the learned control set into the Hamilton-Jacobi-Isaacs equation, novel safety concepts can be synthesized that are more practical and data-driven.
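To make the idea of a state-dependent control set concrete, here is a minimal sketch of how a control barrier function can filter controls. It is not the speaker's implementation: the single-integrator relative dynamics, the distance-based barrier `h`, the gain `alpha`, and the function names are all illustrative assumptions. In the learning setting described above, the barrier would be fitted to human data rather than written by hand.

```python
import numpy as np

def barrier(x_rel, radius):
    """Hypothetical barrier h(x) = ||x_rel||^2 - radius^2.

    h >= 0 means the two agents are at least `radius` apart.
    """
    return float(x_rel @ x_rel - radius**2)

def safe_controls(x_rel, candidates, radius=1.0, alpha=1.0):
    """Keep the candidate controls that satisfy dh/dt + alpha * h >= 0.

    Assumes single-integrator relative dynamics x_rel_dot = u,
    so dh/dt = 2 * x_rel . u. `candidates` has shape (N, 2).
    """
    h = barrier(x_rel, radius)
    h_dot = 2.0 * candidates @ x_rel          # one value per candidate
    return candidates[h_dot + alpha * h >= 0.0]

# Candidate relative velocities: away, fast approach, slow approach, sideways.
candidates = np.array([[1.0, 0.0], [-1.0, 0.0], [-0.5, 0.0], [0.0, 1.0]])

far = safe_controls(np.array([2.0, 0.0]), candidates)   # agents well separated
near = safe_controls(np.array([1.1, 0.0]), candidates)  # agents almost touching
```

Under these assumptions the allowed set shrinks as the agents get closer: the slow approach is permitted when they are far apart but rejected near the boundary, which is the sense in which the control set depends on the state.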

Interaction-Aware Planning

- The speaker then presents work on interaction-aware planning to make interactions between humans and robots natural and seamless without explicit communication.

- They argue that humans are self-preserving and will engage in joint collision avoidance, and propose a method for achieving safe and fluent human-robot interactions through simple changes to a standard trajectory optimization problem.

- The speaker introduces the concepts of legibility and proactivity in robot motion and discusses how they can be encoded into a robot planning algorithm.

- They also note that humans are not completely selfless, and introduce a notion of inconvenience so that the burden any one individual bears during an interaction can be limited.

- The research introduces the concept of a "markup factor" to encourage robots to take proactive and legible actions when avoiding collisions with humans.

- A higher markup factor leads to earlier and faster rotation of the robot, making its intentions more apparent to humans.

- The research also introduces an "inconvenience budget" to limit the amount of inconvenience caused to both the robot and humans during collision avoidance.

- The proposed framework outperforms baseline methods in terms of fluency, fairness, and pro-social interactions.

- The research demonstrates the effectiveness of the proposed framework through simulations and human-in-the-loop experiments.

- The framework can be easily extended to incorporate multiple agents and obstacles, making it suitable for indoor and outdoor navigation.
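The inconvenience budget can be illustrated with a small sketch. This summary does not give the talk's actual definition, so the measure used here (relative extra path length compared with the path an agent would take alone) and the names `inconvenience` and `within_budget` are assumptions chosen for illustration. In a planner, a check like this would more plausibly appear as a constraint inside the trajectory optimization than as a post-hoc test.

```python
import numpy as np

def path_length(traj):
    """Total Euclidean length of a trajectory given as a sequence of 2D waypoints."""
    traj = np.asarray(traj, dtype=float)
    return float(np.sum(np.linalg.norm(np.diff(traj, axis=0), axis=1)))

def inconvenience(traj, nominal_traj):
    """Assumed measure: extra path length relative to the interaction-free path."""
    nominal = path_length(nominal_traj)
    return (path_length(traj) - nominal) / nominal

def within_budget(traj, nominal_traj, budget):
    """True if the agent's inconvenience does not exceed its budget."""
    return inconvenience(traj, nominal_traj) <= budget

# Nominal path: straight line of length 4. Detour: two segments of length 2.5 each.
nominal = [[0.0, 0.0], [4.0, 0.0]]
detour = [[0.0, 0.0], [2.0, 1.5], [4.0, 0.0]]

cost = inconvenience(detour, nominal)          # 25% longer than the nominal path
ok_loose = within_budget(detour, nominal, 0.3)
ok_tight = within_budget(detour, nominal, 0.2)
```

With a budget per agent, a planner can reject solutions in which one party, human or robot, absorbs most of the detour.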

Ongoing Challenges

- The speaker emphasizes the importance of intentional data collection, focusing on safety-critical scenarios and human responses in those situations.

- They propose using control theory to provide inductive bias for learning methods, rather than applying learning methods to control theory.

- Addressing the curse of dimensionality is crucial for making reachability methods computationally practical.

- Testing algorithms with humans in the loop is expensive and challenging, especially in terms of developing performance metrics.